Challenges of Sensor Fusion and Perception for ADAS/AD

Sensor fusion is the merging of data from at least two sensors. Perception refers to the processing and interpretation of sensor data to detect, identify and classify objects. Together, sensor fusion and perception enable an autonomous vehicle to build a 3D model of its surrounding environment, which feeds into the vehicle control unit. Today’s perception solutions use architectures and sensor data fusion methods that limit their performance, scalability, flexibility, and reliability. Let’s look at these challenges in more detail.

Existing Sensor Fusion and Perception Solutions

Current sensor fusion solutions perform object-level fusion, in which each sensor (e.g., radar, camera, LiDAR) detects and classifies objects on its own, subject to its inherent limitations. This approach performs poorly because no single sensor can detect all objects under all conditions. Furthermore, because the raw sensor data is never fused, the system may receive contradictory inputs from different sensors and be unable to decide on the next action with certainty.
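To make the contradiction problem concrete, here is a minimal sketch of generic object-level (late) fusion. The `Detection` type, the 1.5 m association gate and the sample detections are hypothetical, chosen only for illustration; this is not how any particular product associates objects. Each sensor has already reduced its raw data to a labeled object, so when the camera and radar disagree about the same object, the fuser can flag the conflict but has no underlying data with which to resolve it.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """One object reported by one sensor (hypothetical format)."""
    sensor: str
    x: float           # longitudinal position in the vehicle frame, metres
    y: float           # lateral position in the vehicle frame, metres
    label: str
    confidence: float


def late_fuse(detections: List[Detection], gate_m: float = 1.5) -> List[List[Detection]]:
    """Object-level fusion: group detections lying within gate_m of a cluster's first member."""
    clusters: List[List[Detection]] = []
    for det in detections:
        for cluster in clusters:
            ref = cluster[0]
            if math.hypot(det.x - ref.x, det.y - ref.y) <= gate_m:
                cluster.append(det)
                break
        else:
            # No existing cluster is close enough, so start a new one.
            clusters.append([det])
    return clusters


def conflicting(clusters: List[List[Detection]]) -> List[List[Detection]]:
    """Return clusters whose member sensors disagree on the object class."""
    return [c for c in clusters if len({d.label for d in c}) > 1]


# Illustrative inputs: camera and radar see the same object but classify it differently.
detections = [
    Detection("camera", 20.0, 0.5, "pedestrian", 0.55),
    Detection("radar", 20.4, 0.3, "vehicle", 0.60),
    Detection("lidar", 35.0, -2.0, "vehicle", 0.90),
]
clusters = late_fuse(detections)
for cluster in conflicting(clusters):
    print({d.sensor: d.label for d in cluster})  # camera says pedestrian, radar says vehicle
```

A tie-breaking rule such as keeping the higher-confidence label (0.60 vs. 0.55) only hides the disagreement; the raw measurements that might settle it were discarded before fusion.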

Existing sensor fusion and perception solutions are also camera-centric and have limited ability to incorporate other sensor architectures within the same platform. The result is poor performance, limited scalability and reliability, and dependence on specific hardware.

The Way Forward for Sensor Fusion and Perception

To accelerate ADAS and AD adoption, sensor fusion and perception solutions must provide the requisite performance, flexibility, scalability and reliability.

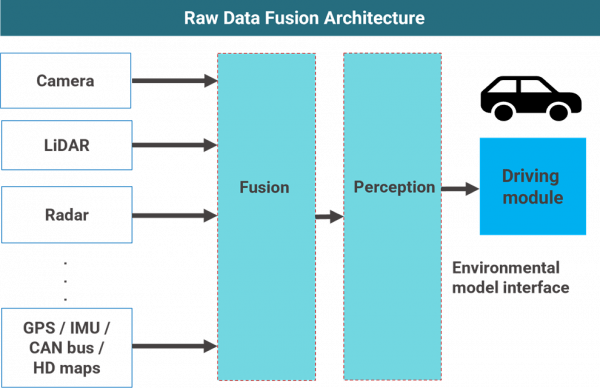

Performance: Higher autonomy levels demand greater accuracy and precision in object detection, classification and tracking, as well as in environmental modeling. The preferred technique for achieving this is raw data sensor fusion, which combines the raw data from all available sensors on the vehicle and gives the perception system rich data with which to detect and classify objects accurately.
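As an illustration of what combining raw data can mean at the lowest level, the sketch below projects LiDAR points into a camera image with a pinhole model and attaches a depth value to each pixel they hit, yielding sparse RGBD data that a single detector could consume. It assumes the points are already transformed into the camera frame (x right, y down, z forward) and that the intrinsics `fx`, `fy`, `cx`, `cy` are known; the function names and sample values are hypothetical, and this is a generic early-fusion step, not LeddarVision’s pipeline.

```python
from typing import Dict, List, Optional, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in the camera frame, metres
Pixel = Tuple[int, int]               # (u, v) = (column, row)
RGB = Tuple[int, int, int]


def project_points(points: List[Point3D], fx: float, fy: float, cx: float, cy: float,
                   width: int, height: int) -> Dict[Pixel, float]:
    """Pinhole projection of LiDAR points; keeps the nearest depth when points share a pixel."""
    depth: Dict[Pixel, float] = {}
    for x, y, z in points:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            if (u, v) not in depth or z < depth[(u, v)]:
                depth[(u, v)] = z
    return depth


def fuse_rgbd(rgb: List[List[RGB]],
              depth: Dict[Pixel, float]) -> List[List[Tuple[int, int, int, Optional[float]]]]:
    """Append a depth channel to every pixel; None where no LiDAR return landed."""
    return [[(*rgb[v][u], depth.get((u, v))) for u in range(len(rgb[v]))]
            for v in range(len(rgb))]


image = [[(128, 128, 128)] * 4 for _ in range(4)]  # tiny 4x4 grey image
lidar = [(0.0, 0.0, 10.0), (1.0, 0.0, 5.0), (-1.0, -1.0, 2.0), (0.0, 0.0, -1.0)]
depth = project_points(lidar, fx=2.0, fy=2.0, cx=2.0, cy=2.0, width=4, height=4)
rgbd = fuse_rgbd(image, depth)
print(rgbd[2][2], rgbd[1][1], rgbd[0][0])
# (128, 128, 128, 5.0) (128, 128, 128, 2.0) (128, 128, 128, None)
```

Most pixels end up without depth because LiDAR is far sparser than a camera, which is one reason fusion pipelines pair this step with some form of upsampling.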

Flexibility: While traditional and existing perception solutions are camera-centric, future perception solutions need the capability to incorporate other sensor architectures on the same platform based on the level of autonomy desired. Solutions must be able to work with any sensor set without the need to rewrite perception algorithms.
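One common way to let perception code work with any sensor set is to place a sensor-neutral data type between the sensor drivers and the algorithms. The sketch below shows that pattern; `RawFrame`, `SensorAdapter` and the two fake adapters are hypothetical names invented for this example and do not describe LeddarVision’s actual interfaces. Swapping or adding a sensor means writing a new adapter, while the perception function stays unchanged.

```python
import math
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class RawFrame:
    """Sensor-neutral container that every adapter must produce (hypothetical)."""
    sensor_id: str
    modality: str  # e.g. "camera", "radar" or "lidar"
    timestamp_s: float
    points: List[Tuple[float, float, float]] = field(default_factory=list)  # vehicle frame, metres


class SensorAdapter(ABC):
    """Converts one vendor's native output into a RawFrame (hypothetical interface)."""

    @abstractmethod
    def read(self) -> RawFrame:
        ...


class FakeLidarAdapter(SensorAdapter):
    def __init__(self, sensor_id: str, points: List[Tuple[float, float, float]]):
        self.sensor_id = sensor_id
        self.points = points

    def read(self) -> RawFrame:
        # A real adapter would decode vendor-specific packets here.
        return RawFrame(self.sensor_id, "lidar", 0.0, list(self.points))


class FakeRadarAdapter(SensorAdapter):
    def __init__(self, sensor_id: str, targets: List[Tuple[float, float]]):
        self.sensor_id = sensor_id
        self.targets = targets  # (range_m, azimuth_rad) pairs

    def read(self) -> RawFrame:
        # Convert polar targets to Cartesian points; this fake radar reports no elevation, so z = 0.
        pts = [(r * math.cos(a), r * math.sin(a), 0.0) for r, a in self.targets]
        return RawFrame(self.sensor_id, "radar", 0.0, pts)


def count_returns_ahead(frames: List[RawFrame], max_range_m: float) -> int:
    """Stand-in perception step: depends only on RawFrame, never on a concrete adapter."""
    return sum(1 for f in frames for x, _, _ in f.points if 0.0 < x <= max_range_m)


sensors: List[SensorAdapter] = [
    FakeLidarAdapter("lidar_front", [(12.0, 0.5, 0.2), (80.0, -1.0, 0.3)]),
    FakeRadarAdapter("radar_front", [(30.0, 0.0), (45.0, 0.1)]),
]
frames = [s.read() for s in sensors]
print(count_returns_ahead(frames, max_range_m=50.0))  # 3
```

Removing the radar from `sensors`, or adding a camera adapter that emits its own `RawFrame`, requires no change to `count_returns_ahead`.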

Scalability: Vehicles with varying autonomy levels will co-exist as ADAS and AD continue to evolve in the future. The ability to build sensor fusion and perception solutions for L2 to L5 on a common platform is critical to ensure consistency in technical specifications, product quality and driving experience. A scalable perception solution accelerates ADAS and AD adoption.

Reliability: Confidence in autonomous solutions rests on safety and performance. Sensor fusion and perception solutions must meet stringent quality and performance requirements.

What Is LeddarVision?

LeddarVision™ is a raw data sensor fusion and perception platform that generates a comprehensive 3D environmental model with multi-sensor support for camera, radar and LiDAR configurations for advanced driver assistance systems (ADAS) and autonomous vehicles.

The LeddarVision Advantage

Performance advantage: Raw data sensor fusion, sensor synchronization and upsampling underlie LeddarVision’s superior object detection performance and produce an accurate 3D RGBD environmental model. Of all the submissions made to nuScenes™ between 2019 and 2021, LeddarVision’s RCF360v2 is the top-ranking radar/camera solution for 3D object detection. LeddarVision’s upsampling technique provides richer environmental data, which results in:
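Sensor synchronization and upsampling are described here only at a high level, so the following is a generic sketch of each idea rather than LeddarVision’s technique. `interpolate_at` aligns a signal sampled at one sensor’s rate to another sensor’s timestamp by linear interpolation; `nearest_fill` densifies a sparse depth map by copying each empty pixel’s nearest measured value, the simplest possible upsampling scheme (more elaborate approaches, such as image-guided or learned depth completion, exist). All names, timestamps and values are illustrative assumptions.

```python
import bisect
from typing import Dict, List, Tuple


def interpolate_at(samples: List[Tuple[float, float]], t_query: float) -> float:
    """Linearly interpolate a time series [(t, value), ...] sorted by t at time t_query.

    Queries outside the sampled interval are clamped to the first or last value.
    """
    if not samples:
        raise ValueError("no samples to interpolate")
    times = [t for t, _ in samples]
    i = bisect.bisect_left(times, t_query)
    if i == 0:
        return samples[0][1]
    if i == len(samples):
        return samples[-1][1]
    (t0, v0), (t1, v1) = samples[i - 1], samples[i]
    alpha = (t_query - t0) / (t1 - t0)  # t0 < t_query <= t1, so t1 > t0
    return v0 + alpha * (v1 - v0)


def nearest_fill(depth: Dict[Tuple[int, int], float], width: int, height: int) -> List[List[float]]:
    """Upsample sparse depth {(u, v): d} to a dense grid using each pixel's nearest measurement."""
    if not depth:
        raise ValueError("need at least one depth measurement")
    known = list(depth.items())
    dense = [[0.0] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            _, d = min(known, key=lambda kv: (kv[0][0] - u) ** 2 + (kv[0][1] - v) ** 2)
            dense[v][u] = d
    return dense


# Radar range to a target sampled every 50 ms; a camera frame is captured at t = 20 ms.
radar_range = [(0.0, 30.0), (50.0, 29.5), (100.0, 29.0)]
print(interpolate_at(radar_range, 20.0))  # about 29.8 (metres)

# Two sparse LiDAR depths on a 4 x 1 strip of pixels.
print(nearest_fill({(0, 0): 10.0, (3, 0): 20.0}, width=4, height=1))  # [[10.0, 10.0, 20.0, 20.0]]
```

Linear interpolation assumes the measured quantity changes smoothly between samples; fast-changing signals may need motion models instead.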

- Accurate and precise detection

- Resolution of conflicting sensor inputs

- Built-in redundancy

Flexibility advantage: LeddarVision is a hardware-agnostic sensor fusion and perception solution that decouples the software from hardware and provides opportunities for OEMs and Tier 1-2 automotive suppliers to develop multiple sensor architectures on a common platform and reduce hardware costs by diversifying hardware sourcing.

Scalability advantage: LeddarVision’s ability to integrate with a wide range of sensor architectures, together with its raw data sensor fusion capabilities, upsampling techniques and deep learning algorithms, enables it to deliver superior performance at L2 as well as at higher autonomy levels.

Reliability advantage: LeddarVision has built-in redundancy and a safety health module within its software stack. LeddarTech’s robust quality management system follows guidelines towards achieving functional safety (FuSA), safety of intended functionality (SOTIF) and cybersecurity. LeddarTech’s domain expertise, strong work processes and robust quality management systems are geared towards ushering in an era of reliable and safe ADAS and AD.
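The internals of the safety health module are not described here, so the following is only a hypothetical sketch of the kind of check such a module might run: it marks any sensor whose latest data is older than a staleness threshold and reports whether enough healthy sensors remain for the redundant set to keep operating. The function name, the 0.2 s threshold and the two-sensor minimum are assumptions for illustration, not LeddarTech parameters.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HealthReport:
    stale: List[str]    # sensors whose latest data is older than the threshold
    degraded: bool      # True if at least one sensor is stale
    can_continue: bool  # True if enough healthy sensors remain


def check_health(last_seen_s: Dict[str, float], now_s: float,
                 max_age_s: float = 0.2, min_healthy: int = 2) -> HealthReport:
    """Hypothetical staleness check over the timestamp of each sensor's latest frame."""
    stale = sorted(s for s, t in last_seen_s.items() if now_s - t > max_age_s)
    healthy = len(last_seen_s) - len(stale)
    return HealthReport(stale=stale, degraded=bool(stale), can_continue=healthy >= min_healthy)


# The LiDAR has not delivered a frame for 0.55 s; camera and radar are still current.
report = check_health({"camera": 10.00, "radar": 9.95, "lidar": 9.50}, now_s=10.05)
print(report)  # HealthReport(stale=['lidar'], degraded=True, can_continue=True)
```

What the system should do once it enters a degraded state is a separate design decision outside the scope of this sketch.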

This White Paper does not constitute a reference design. The recommendations contained herein are provided “as is” and do not constitute a guarantee of completeness or correctness. LeddarTech® has made every effort to ensure that the information contained in this document is accurate. Any information herein is provided “as is.” LeddarTech shall not be liable for any errors or omissions herein or for any damages arising out of or related to the information provided in this document. LeddarTech reserves the right to modify design, characteristics and products at any time, without notice, at its sole discretion. LeddarTech does not control the installation and use of its products and shall have no liability if a product is used for an application for which it is not suited. You are solely responsible for (1) selecting the appropriate products for your application, (2) validating, designing and testing your application and (3) ensuring that your application meets applicable safety and security standards. Furthermore, LeddarTech products are provided only subject to LeddarTech’s Sales Terms and Conditions or other applicable terms agreed to in writing. By purchasing a LeddarTech product, you also agree to read carefully and be bound by the information contained in the User Guide accompanying the product purchased.
