Download Complete White Paper in PDF Format

White Paper – Neural networks are a critical prerequisite for developing advanced driver assistance systems (ADAS). They are used for driving tasks such as localization, path planning, and perception. In this white paper, we explore what neural networks are, how they function, and the various techniques used for object detection and classification in perception systems.

Neural networks are a subset of machine learning and artificial intelligence, inspired in their design by the functioning of the human brain. They are computing systems that use a series of algorithms, expressed as mathematical functions, to produce an output based on input data. One of the most significant advantages of neural networks in advanced driver assistance systems is their ability to handle a wide range of situations. As these neural networks and machine learning models are trained on and exposed to varied data, they become able to operate independently and handle similar but previously unseen circumstances.

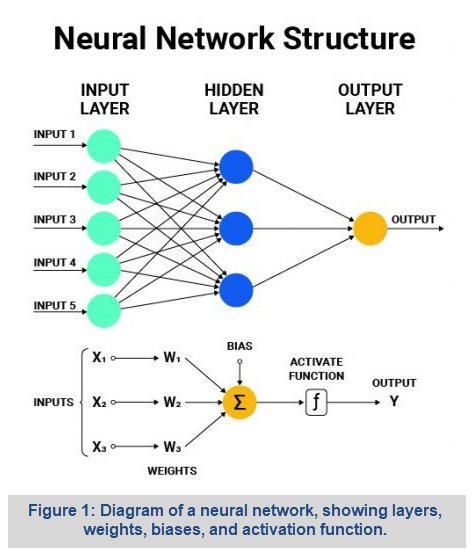

A feed-forward neural network is one of many types of neural network; others include the convolutional neural network (CNN) and the recurrent neural network (RNN). A neural network is a system that delivers a best-fit output based on input data, optimizing variables to minimize error. The network tunes its parameters (also called variables) repeatedly until it produces an output with minimal error. This tuning takes place in the hidden layers of the network, which represent the series of algorithms and activation functions that optimize the variables to provide the output. The cost function quantifies the error between the produced output and the true output. The cost function is not covered in detail in this white paper; read the technical note to learn more.
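To make the idea of a forward pass and a cost function concrete, the following is a minimal sketch rather than the implementation used in any production system. It assumes a single hidden layer, a sigmoid activation, and mean squared error as the cost function; the white paper does not specify any of these, and the function names `forward` and `mean_squared_error` are illustrative.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Run one forward pass through a network with a single hidden layer.

    hidden_weights holds one list of weights per hidden neuron.
    """
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    # The output neuron is a plain weighted sum of the hidden values (assumption).
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


def mean_squared_error(predictions, targets):
    """Cost function: average squared gap between produced and true outputs."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


if __name__ == "__main__":
    prediction = forward(
        inputs=[0.5, -1.0],
        hidden_weights=[[0.1, 0.4], [-0.3, 0.2]],
        hidden_biases=[0.0, 0.1],
        output_weights=[0.7, -0.5],
        output_bias=0.05,
    )
    print("prediction:", prediction)
    print("cost:", mean_squared_error([prediction], [1.0]))
```

Training then amounts to adjusting the weights and biases so that the value returned by the cost function shrinks.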

Each neuron in a neural network has a value assigned to it, and each line connecting neurons in different layers carries a weight. The magnitude of the weight represents the strength of the relationship between the two neurons. The activation function processes the neuron value and its associated weight to determine the value of a neuron in the next layer. Neural networks are first trained and then tested. Training is critical because this is how the network optimizes its variables so that the output error is minimized. During training, the network is fed input data and tasked with providing an output. The resulting output is compared against the correct output, and the difference is fed back into the system, enabling the neural network to adjust its variables to minimize error.
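The feedback loop described above can be sketched for a single neuron. This is an illustrative example under stated assumptions, not the training procedure of any particular product: it assumes a sigmoid activation, a squared-error measure, and plain gradient descent, and the names `train_neuron` and `SAMPLES` are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_neuron(samples, learning_rate=0.5, epochs=2000):
    """Train one sigmoid neuron by feeding the output error back into its variables."""
    weight, bias = 0.0, 0.0
    history = []  # mean squared error recorded after each pass over the data
    for _ in range(epochs):
        total_error = 0.0
        for x, target in samples:
            output = sigmoid(weight * x + bias)   # produced output
            error = output - target               # difference from the correct output
            total_error += error ** 2
            # Chain rule: gradient of error^2 with respect to the pre-activation value.
            gradient = 2 * error * output * (1 - output)
            weight -= learning_rate * gradient * x
            bias -= learning_rate * gradient
        history.append(total_error / len(samples))
    return weight, bias, history


# Toy data (assumption): negative inputs map to 0, positive inputs map to 1.
SAMPLES = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]

if __name__ == "__main__":
    weight, bias, history = train_neuron(SAMPLES)
    print("error after first pass:", history[0])
    print("error after last pass:", history[-1])
```

The recorded error falls as training proceeds, which is the behavior the paragraph describes: each comparison against the correct output nudges the weight and bias toward values that produce less error.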

Two common techniques for training neural networks are supervised learning and unsupervised learning. In supervised learning, the training dataset pairs each input with its labeled correct answer. During training, the neural network adjusts its weights and biases to improve its performance on the training data and reduce error. The networks are trained on large and varied datasets, with the aim that what the network learns by changing its weights and biases can be applied to new, unseen data to deliver the correct output. An example of supervised training is teaching the network that ‘1+1=2, 1+2=3, and 1+3=4’; the aim is then for the network to correctly answer ‘What is 2+3?’. In unsupervised learning, by contrast, the training data carries no labeled correct output, and the network learns structure from the inputs alone. A related approach replaces manual labeling by a human with an automated system that can accurately and reliably provide the correct output for comparison against the produced output.
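The addition example can be reproduced with a very small model. The sketch below is only an illustration under explicit assumptions: it uses a single linear neuron with two weights and no bias, trained by batch gradient descent on mean squared error, and the names `train_adder`, `predict`, and `EXAMPLES` are hypothetical. A real perception network is far larger, but the principle of learning from labeled pairs is the same.

```python
# Labeled training data taken from the text: 1+1=2, 1+2=3, 1+3=4.
EXAMPLES = [((1.0, 1.0), 2.0), ((1.0, 2.0), 3.0), ((1.0, 3.0), 4.0)]


def predict(weights, a, b):
    """Linear neuron without bias (assumption): output = w1 * a + w2 * b."""
    w1, w2 = weights
    return w1 * a + w2 * b


def train_adder(examples, learning_rate=0.05, epochs=5000):
    """Fit the two weights by batch gradient descent on mean squared error."""
    w1, w2 = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w1 = grad_w2 = 0.0
        for (a, b), target in examples:
            error = predict((w1, w2), a, b) - target
            grad_w1 += 2 * error * a / n
            grad_w2 += 2 * error * b / n
        w1 -= learning_rate * grad_w1
        w2 -= learning_rate * grad_w2
    return w1, w2


if __name__ == "__main__":
    weights = train_adder(EXAMPLES)
    # 2+3 never appears in the training data; the result should be close to 5.
    print("2 + 3 is approximately", predict(weights, 2.0, 3.0))
```

Both weights converge toward 1, so the model generalizes to the unseen question. The bias term is left out deliberately: because the first operand is always 1 in the training data, a model with a bias could fit the three examples in several ways, and not all of them would answer 2+3 correctly. This is a small-scale reminder of why the text stresses large and varied training datasets.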

Machine learning and neural networks are used for various driving tasks such as localization, object behavior prediction, decision making, path planning, and environmental perception. Convolutional neural networks (CNNs) are the cornerstone of computer vision and are used extensively for camera-based perception, where they perform object detection and classification. The sensor architecture for entry-level ADAS consists of radars and cameras for front- and surround-view systems. Higher autonomy levels will likely add LiDARs to the sensor mix. Radar and LiDAR perception also uses machine learning and neural networks for object detection and classification, with the network trained on point clouds.

LeddarVision, LeddarTech’s low-level sensor fusion and perception software, combines AI and computer vision technologies and deep neural networks with computational efficiency to scale up the performance of ADAS/AD sensors and hardware, which are essential to enable safe and reliable ADAS and AD. LeddarTech enables customers to reap even greater performance and cost rewards as the platform is scalable and sensor-agnostic, due to its raw data fusion technology, unlike other solutions on the market. As a result, the customer controls the design by determining any camera, radar or LiDAR suite that best meets their application and performance requirements.

This White Paper does not constitute a reference design. The recommendations contained herein are provided “as is” and do not constitute a guarantee of completeness or correctness. LeddarTech® has made every effort to ensure that the information contained in this document is accurate. Any information herein is provided “as is.” LeddarTech shall not be liable for any errors or omissions herein or for any damages arising out of or related to the information provided in this document. LeddarTech reserves the right to modify design, characteristics and products at any time, without notice, at its sole discretion. LeddarTech does not control the installation and use of its products and shall have no liability if a product is used for an application for which it is not suited. You are solely responsible for (1) selecting the appropriate products for your application, (2) validating, designing and testing your application and (3) ensuring that your application meets applicable safety and security standards. Furthermore, LeddarTech products are provided only subject to LeddarTech’s Sales Terms and Conditions or other applicable terms agreed to in writing. By purchasing a LeddarTech product, you also accept to carefully read and to be bound by the information contained in the User Guide accompanying the product purchased.
