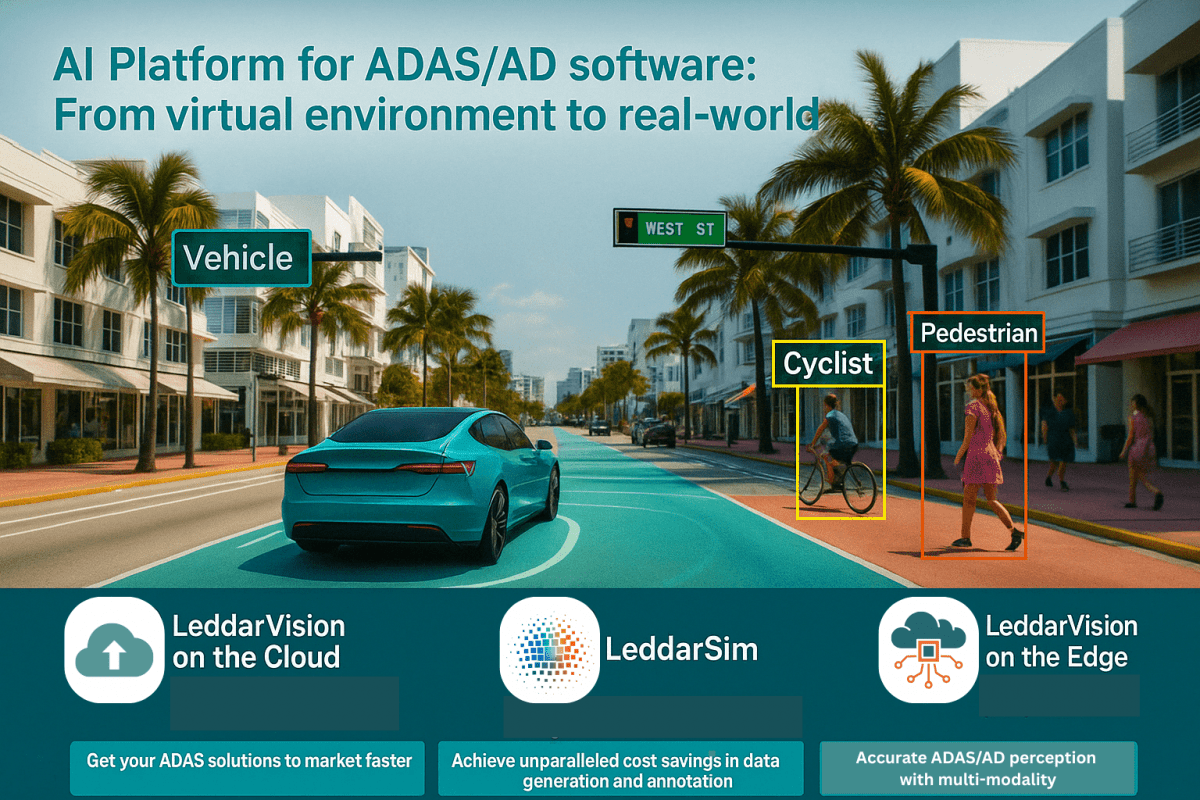

LeddarSim – Simulation for the Future of Mobility

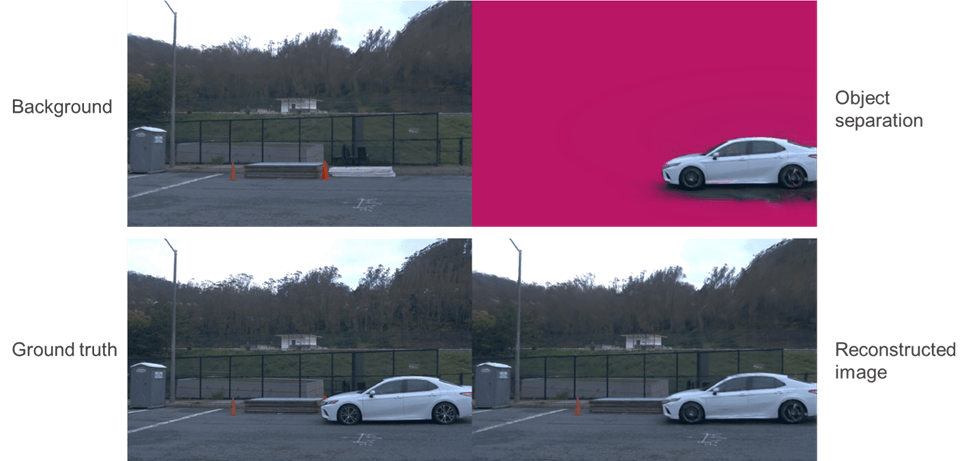

LeddarSim™ is an advanced ADAS and autonomous driving simulator designed to reduce the simulation-to-reality gap. It reconstructs real-world driving scenarios using neural reconstruction techniques such as Gaussian splatting, enabling real-time, photorealistic rendering from multi-modal sensor data including camera, radar and LiDAR.

Accelerate Time-to-Market

Test millions of configurable scenarios virtually, shortening development cycles and speeding up validation.

Cut Costs, Not Corners

Lower the cost per simulation frame by over 90% compared to real-world testing, while increasing accuracy.

Design Once. Deploy Anywhere.

Easily adapt sensor setups, vehicle types and regional driving conditions to scale development across platforms.

Data-Driven Simulation

Build realistic scenarios directly from real-world data, rather than synthetic environments, for greater accuracy and relevance.

Multi-Modal Sensor Support

Simulate LiDAR, radar and camera data together to optimize and validate multi-sensor perception systems.

Cloud-to-Edge Workflow

Run simulations in the cloud or on-premises, with a seamless path from development to edge deployment.

Advanced Scenario Editor

Create and modify complex driving scenarios to quickly test edge cases and custom conditions at scale.

Reusable Data Assets

Store and adapt scene components for future projects, reducing the need for repeated data collection and labeling.

Sensor Configuration Testing

Test different sensor placements, specifications and combinations across diverse vehicle types.

Actor Simulation

Insert rare or under-represented road users such as animals, cyclists or emergency vehicles.

Environmental Variables

Simulate changing conditions such as weather, signage, construction zones and more.

Ego-Vehicle Behavior

Model lane changes, speed shifts and trajectory adjustments on real-world road layouts.

Real-world data collection remains a major bottleneck in ADAS and autonomous system development. Gathering diverse driving data is time-consuming, expensive and often unsafe, especially when trying to capture rare or high-risk edge cases such as low-visibility scenarios, unexpected pedestrian behavior or complex traffic interactions.

Digital-twin-based simulations were introduced to address these challenges, but they come with their own limitations. These systems are expensive to build, difficult to scale and often fail to replicate the full fidelity of real sensor behavior, which leaves a gap between virtual testing and real-world performance.

By combining real-world data with AI-powered reconstruction techniques such as neural radiance fields (NeRF) and Gaussian splatting, LeddarSim generates high-fidelity, fully reconfigurable 3D scenes. Developers can simulate complex scenarios with true-to-life detail, without the delays and costs of physical testing.

Autonomous driving developers commonly hit a wall at SAE Level 3 (L3) autonomy and above because of three core challenges:

Intractable corner cases: The vast number of rare events encountered in routine driving makes exhaustive field validation impossible.

Manual data workflows: Hand-crafted data collection and annotation slow down development cycles and drive up costs.

Sensor-configuration silos: Datasets tied to specific vehicle sensor setups cannot be generalized or shared across platforms.

LeddarSim addresses these challenges:

Close-to-zero simulation gap: Provides end-to-end training and validation in a unified simulated environment, ensuring fidelity to real-world conditions.

No 3D environment modeling required: Automatically generates realistic scenarios without the overhead of manual scene reconstruction.