Superior Sensor Fusion

Enabling Safer Navigation

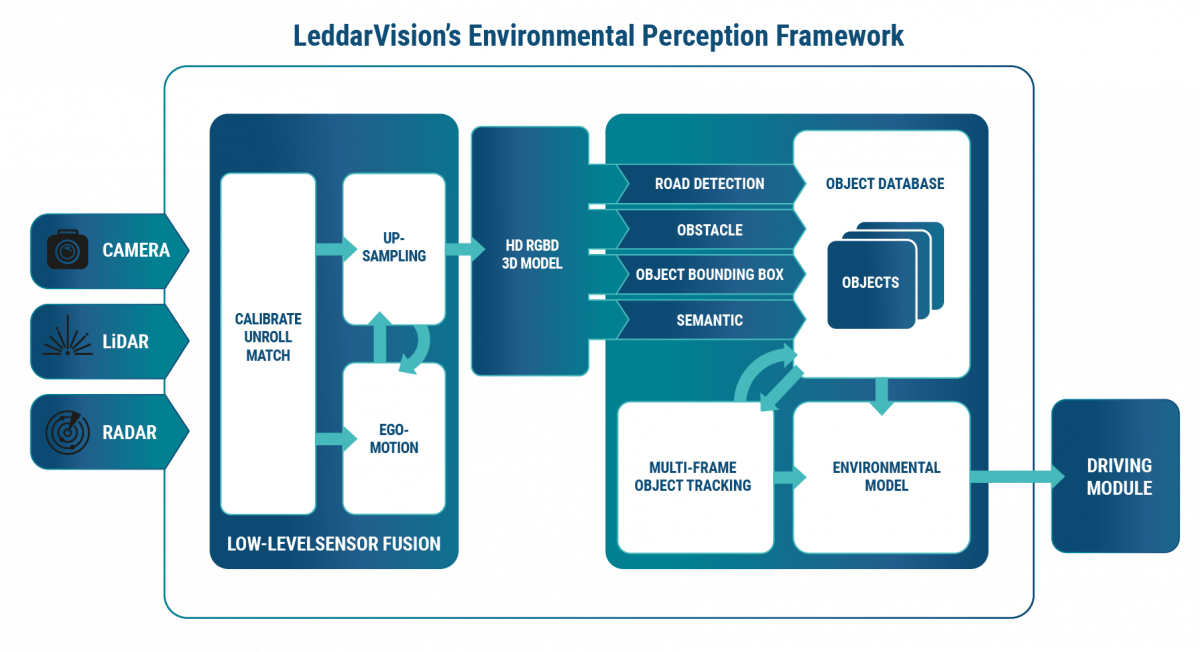

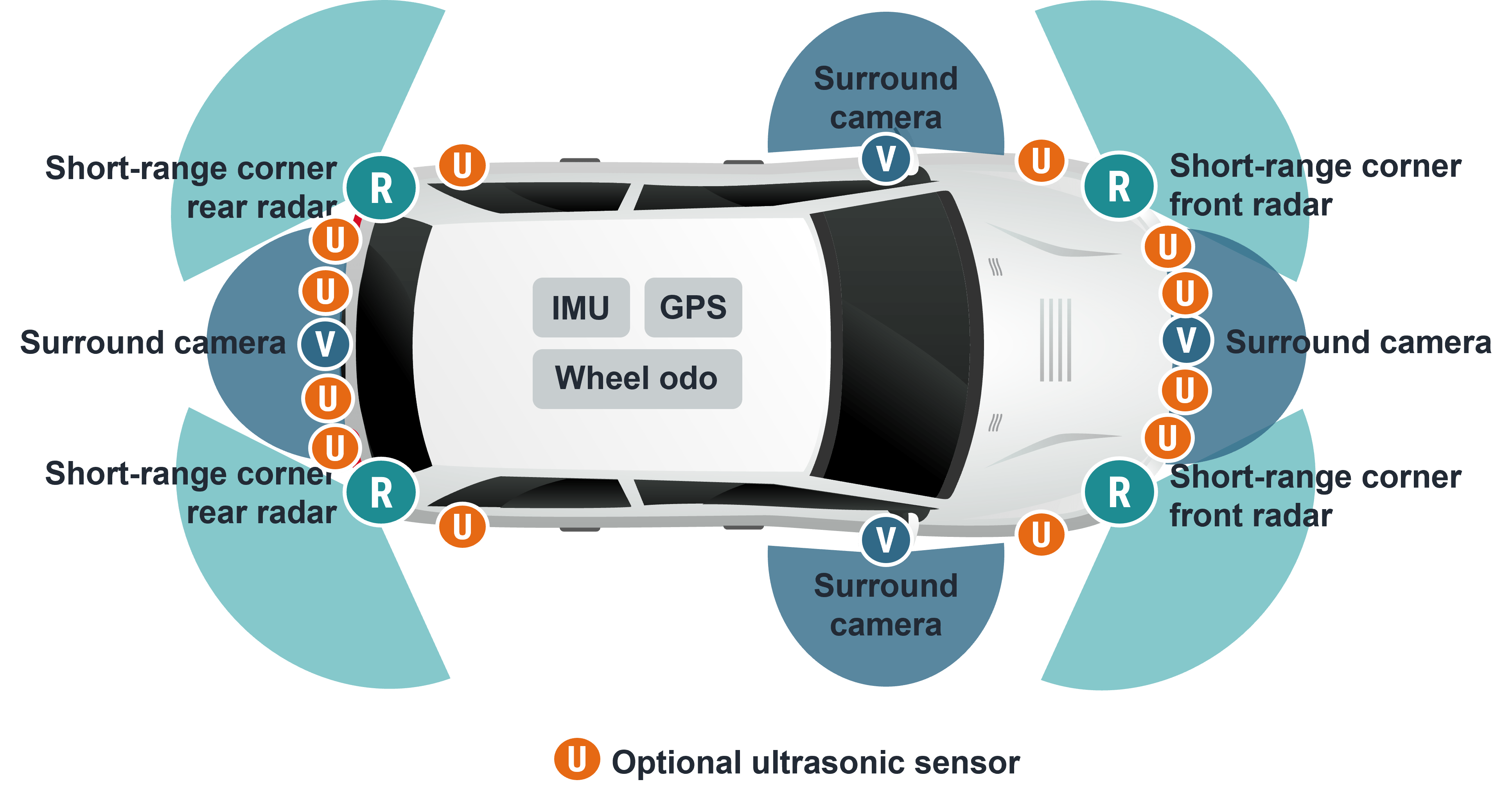

Building on LeddarTech’s extensive, demonstrated expertise in low-level sensor data fusion, LeddarVision’s AI-based software processes sensor data at a low level to efficiently build the reliable understanding of the vehicle’s environment that navigation decision-making and safer driving require. LeddarVision resolves many limitations of ADAS architectures based on legacy object-level fusion by providing:

- Scalability to AD/HAD

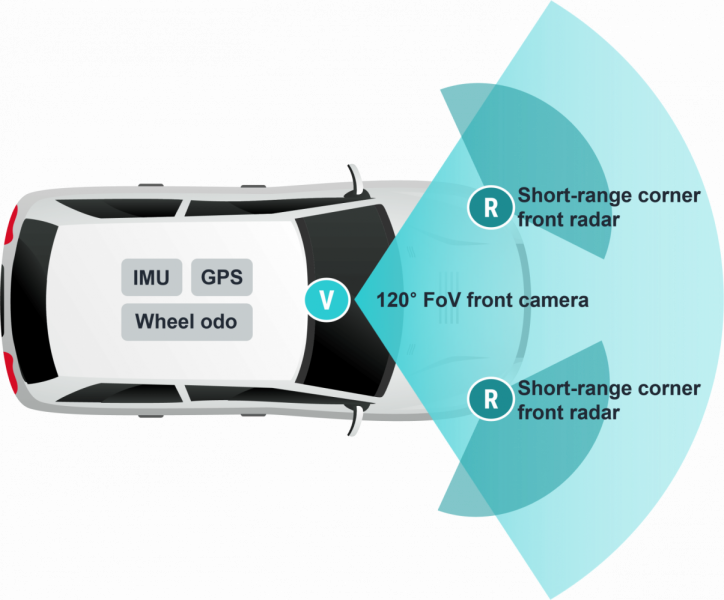

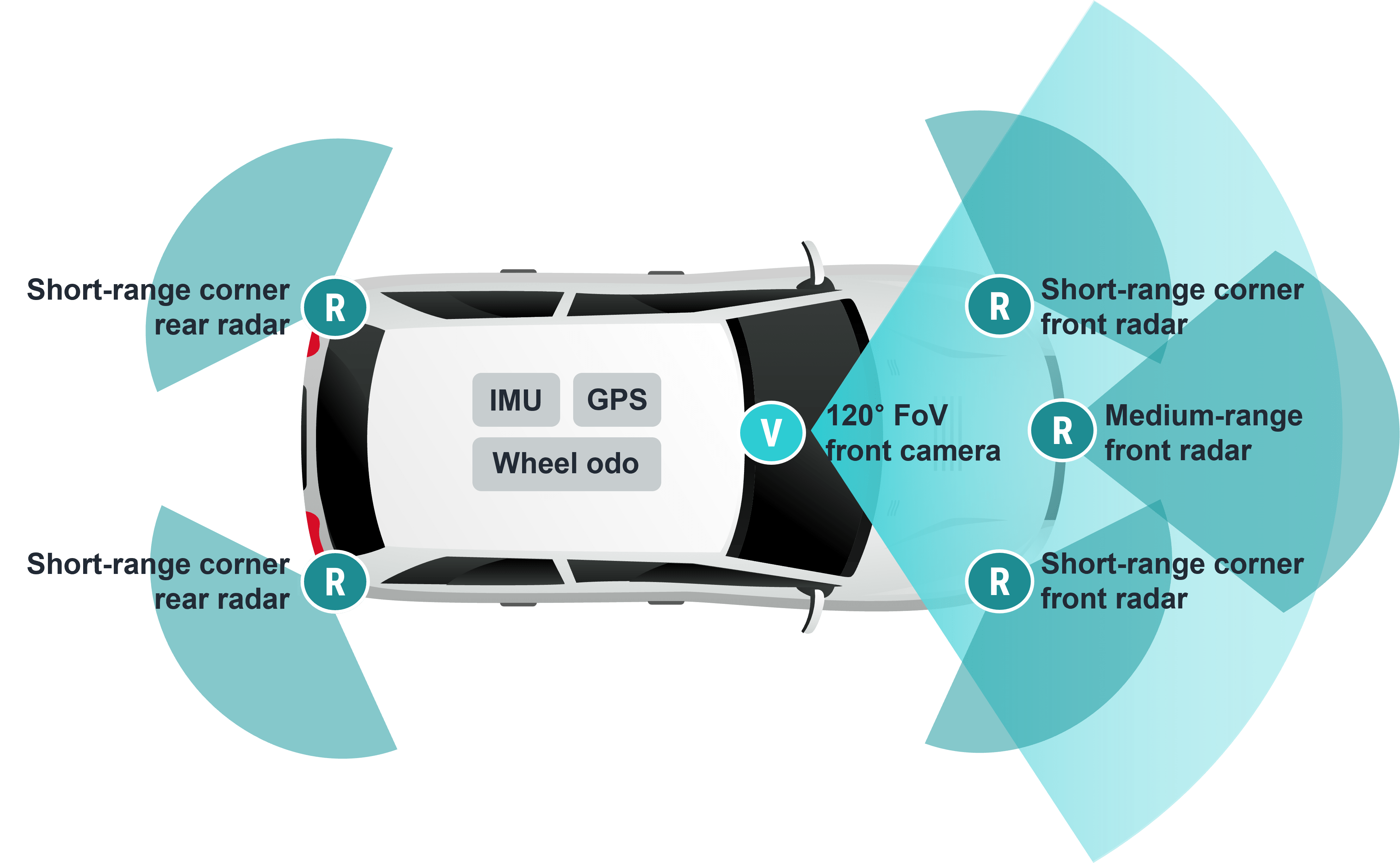

- Flexible modularity to effectively handle a growing variety of use cases, features and sensor sets

- Centralized, hardware-agnostic low-level fusion, which optimally fuses all sensors for higher and more reliable performance

Low-level sensor fusion uses information from all sensors for better and more reliable operation. As a result, this low-level sensor data fusion and perception solution outperforms object-level fusion in the adverse scenarios where the latter falls short, such as occluded objects, object separation, camera/radar false alarms, blinding light (e.g., sun, tunnel), and distance/heading estimation.
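
The difference between the two fusion styles can be illustrated with a toy sketch. This is not LeddarVision's algorithm: the grid cells, confidence values, threshold, and function names below are all hypothetical, and the combination rule (summing log-odds under an assumed independence of the two sensors and a uniform prior) is just one simple way to fuse evidence before making a decision.

```python
import math

THRESHOLD = 0.8  # hypothetical detection threshold on occupancy probability


def logit(p):
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


def sigmoid(x):
    """Convert log-odds back into a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def object_level_fusion(camera, radar, threshold=THRESHOLD):
    """Each sensor decides on its own; the detections are merged afterwards."""
    camera_hits = {i for i, p in enumerate(camera) if p >= threshold}
    radar_hits = {i for i, p in enumerate(radar) if p >= threshold}
    return sorted(camera_hits | radar_hits)


def low_level_fusion(camera, radar, threshold=THRESHOLD):
    """Evidence from both sensors is combined first, then thresholded once."""
    fused = [sigmoid(logit(c) + logit(r)) for c, r in zip(camera, radar)]
    return [i for i, p in enumerate(fused) if p >= threshold]


# Hypothetical per-cell confidences for four grid cells:
# cell 0: empty, cell 1: weak (e.g., partly occluded) object,
# cell 2: camera-only false alarm, cell 3: clearly visible object.
camera = [0.10, 0.70, 0.85, 0.95]
radar = [0.10, 0.70, 0.10, 0.90]

print("object-level:", object_level_fusion(camera, radar))
print("low-level:   ", low_level_fusion(camera, radar))
```

In this made-up example, the per-sensor decisions miss the weak object in cell 1 (neither sensor reaches the threshold alone) and pass the camera false alarm in cell 2, whereas combining the evidence first detects cell 1 and rejects cell 2 because the radar's low confidence counts against it.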