
Abstract

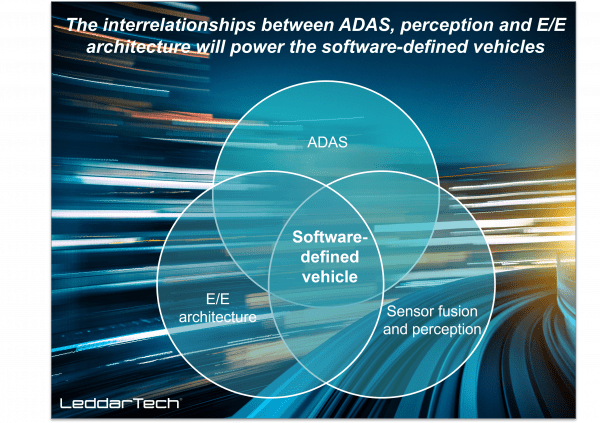

What will mobility look like in 2040? How ubiquitous will autonomous vehicles become? Advances in advanced driver assistance systems (ADAS), over-the-air updates and vehicle-to-everything (V2X) communication promise a transformative future. This vision is materializing today as automotive manufacturers and their Tier 1-2 suppliers pivot towards a software-defined future, laying the foundation for the next evolution in transportation. The impact of this shift will be felt by all: from ADAS developers seeking to build high-performance, reliable systems, to electrical/electronic (E/E) architects looking to minimize costs while building a scalable platform, to strategy and finance professionals who must anticipate the impact of their upcoming software-defined vehicles on market share.

This White Paper explains the ramifications of the shift towards a software-defined future for automotive OEMs and their Tier 1-2 suppliers, specifically describing the impact of advancements in ADAS/AD, sensor fusion and perception on the vehicles’ E/E architecture (EEA). In the following sections, we will look at the different types of EEA, the advantages and disadvantages of each, how ADAS and perception systems influence E/E design and the benefits that AI-based low-level sensor fusion and perception systems deliver.

The Three Types of E/E Architectures

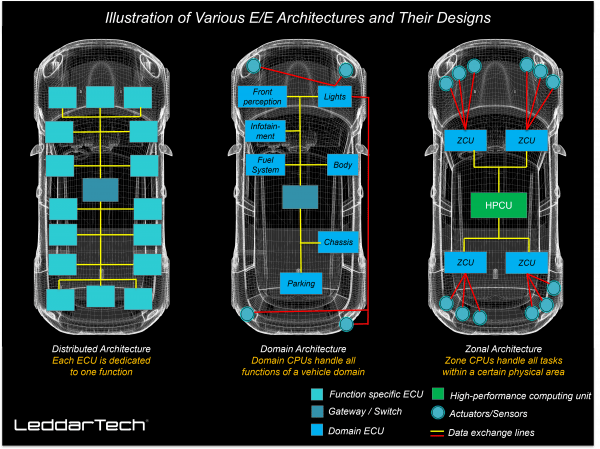

EEA refers to the design and layout of electrical and electronic systems within a vehicle. These architectures have evolved over the years to accommodate the increasing complexity and integration of electronic components and systems in modern vehicles. EEA can be viewed from two perspectives: the physical domain and the data domain. Viewed from the physical domain, the EEA describes the position and placement of hardware such as electronic control units (ECUs), sensors, actuators, gateways and power supply. Viewed from the data domain, the EEA describes the data exchange, signals, communication network and interconnections among the various components and processors in the vehicle.

Figure 1 – The interrelationships between ADAS, perception and E/E architecture will power software-defined vehicles

1. Distributed E/E architecture: In a distributed architecture, electronic control units are distributed throughout the vehicle, each responsible for a specific function or subsystem. The ECUs communicate over a network such as CAN (Controller Area Network). This architecture is common in older vehicle models. From an ADAS perspective, for example, adaptive cruise control and front collision warning would each have a separate ECU. The advantages of a distributed EEA are modularity and redundancy for critical functions (a failure in one area does not necessarily affect the entire system). However, the high number of task-specific ECUs results in cumbersome wiring and high system weight: wiring can account for as much as 20 percent of total EEA cost in modern vehicles, and the wiring harness is the third heaviest component in the vehicle. A distributed EEA also scales poorly and is constrained by limited bandwidth. A key advantage of shifting to a newer EEA is therefore a reduction in wiring requirements, which cuts vehicle cost and weight.

2. Domain E/E architecture: This architecture is a hybrid approach that combines aspects of both distributed and centralized architectures. It organizes ECUs into domains based on their functions (e.g., powertrain, chassis and infotainment), and each domain has its own controller that manages the functions within that domain. The domain architecture is best viewed through the lens of the data (or logical) domain, in that related functions are grouped together. By way of example, infotainment, chassis, body, lights, cabin and driver monitoring systems would each have a dedicated functional controller (also known as a domain controller). This architecture offers advantages such as improved organization, system modularity and more efficient communication within each domain. Although it reduces the number of ECUs and the wiring requirements, the reduction tends to be small. For example, the chassis controller must still be wired to all four wheels for traction control.

3. Zonal E/E architecture: This architecture is best viewed through the physical domain. In a zonal EEA, the vehicle is divided into physical zones (such as rear right, rear left, center, front right and front left). All functions within a zone are handled by a single computing unit, which also serves as the communication gateway for that zone. Key benefits of the zonal architecture are reduced wiring requirements, improved communication and data transfer, support for over-the-air updates, faster software development and reduced cost and weight. However, latency and automotive security are key challenges when implementing this architecture.

Figure 2 – Various E/E architectures and their designs
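The difference between the three architectures can be made concrete with a small sketch. The Python example below is illustrative only: the function list, domain names and zone names are hypothetical, and the controller counts it prints describe this toy data set, not a real vehicle. It shows how the same set of functions maps to one ECU per function (distributed), one controller per logical domain (domain) or one controller per physical zone (zonal).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleFunction:
    name: str    # function provided to the vehicle
    domain: str  # logical grouping (data-domain view)
    zone: str    # physical location (physical-domain view)


# Hypothetical function list, for illustration only.
FUNCTIONS = [
    VehicleFunction("adaptive_cruise_control", "adas", "front_left"),
    VehicleFunction("front_collision_warning", "adas", "front_right"),
    VehicleFunction("traction_control", "chassis", "front_left"),
    VehicleFunction("headlights", "body", "front_right"),
    VehicleFunction("tail_lights", "body", "rear_left"),
    VehicleFunction("media_player", "infotainment", "center"),
]

# How each architecture decides which controller hosts a function.
CONTROLLER_KEY = {
    "distributed": lambda f: f"ecu_{f.name}",   # one ECU per function
    "domain": lambda f: f"domain_{f.domain}",   # one controller per domain
    "zonal": lambda f: f"zone_{f.zone}",        # one controller per zone
}


def group_functions(functions, architecture):
    """Return {controller name: [function names]} for an architecture."""
    if architecture not in CONTROLLER_KEY:
        raise ValueError(f"unknown architecture: {architecture}")
    controllers = defaultdict(list)
    for function in functions:
        controllers[CONTROLLER_KEY[architecture](function)].append(function.name)
    return dict(controllers)


for architecture in CONTROLLER_KEY:
    layout = group_functions(FUNCTIONS, architecture)
    print(f"{architecture}: {len(layout)} controllers")
```

In this toy layout, the two ADAS functions share one controller in the domain view but sit in different zones in the zonal view, which is the distinction between grouping by logic and grouping by physical location.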

How ADAS Influences E/E Architecture

The integration of ADAS has significantly impacted the design of EEA in modern vehicles. ADAS, which encompasses a wide range of safety and convenience features, relies heavily on sophisticated sensors, processors and communication systems, and requires deep learning accelerators for various functions. This influence is evident in several key aspects of EEA design.

Firstly, ADAS necessitates sensor deployment throughout the vehicle. Cameras, radars, LiDARs, ultrasonic sensors and other advanced sensor technologies are strategically positioned to provide comprehensive coverage of the vehicle’s surroundings. While traditional surround-view systems utilize a 12-camera 5-radar (12V5R) sensor architecture, modern AI-based low-level sensor fusion surround-view systems use a 5-camera 5-radar (5V5R) sensor architecture. Consequently, the EEA must accommodate the integration and processing of data from these diverse sensors, which requires robust data fusion techniques, perception output delivery and actuator control, high-speed communication networks and wiring integration. The EEA must also address cybersecurity and ensure that ADAS functions are protected from unwanted external influences.
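The sensor-count difference between the two surround-view configurations is simple arithmetic. The short Python sketch below works it out from the 12V5R and 5V5R labels used above. The `SensorSet` class and `parse_sensor_label` helper are hypothetical names introduced for this illustration, and the sketch counts sensors only; it makes no claim about bandwidth, cost or perception performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSet:
    label: str
    cameras: int
    radars: int

    @property
    def total(self) -> int:
        return self.cameras + self.radars


def parse_sensor_label(label: str) -> SensorSet:
    """Parse a '<cameras>V<radars>R' label such as '12V5R'."""
    cameras, _, rest = label.upper().partition("V")
    radars = rest.rstrip("R")
    return SensorSet(label, int(cameras), int(radars))


traditional = parse_sensor_label("12V5R")
fused = parse_sensor_label("5V5R")

reduction = traditional.total - fused.total
print(traditional.total, fused.total)  # 17 10
print(f"{reduction} fewer sensors ({reduction / traditional.total:.0%})")
# 7 fewer sensors (41%)
```

All seven of the removed sensors are cameras, so the EEA has seven fewer camera links to route, power and process in the 5V5R configuration.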

Secondly, ADAS demands heavy processing and computing capabilities. The high computational requirements for real-time perception, decision making and control functions necessitate powerful processing units capable of swiftly analyzing vast amounts of sensor data. The EEA needs to support high-bandwidth communication networks that enable the rapid sharing of critical information across various vehicle systems. Integrated communication protocols ensure efficient data transmission and real-time coordination among different components, optimizing the performance and responsiveness of ADAS functionalities. Additionally, the EEA must support over-the-air updates to enhance the mobility experience through ADAS improvements and additions.

Thirdly, the EEA must be scalable and flexible enough to handle not only today’s needs but also future ones, as ADAS applications evolve with enhanced features and new sensor architectures.

Lastly, ADAS influences safety considerations in EEA design. Redundancy, fail-safes and safety-critical features become paramount to ensure that ADAS applications function reliably even in the event of a component failure. The EEA and perception system must accommodate redundant sensors, processors and communication pathways to maintain the system’s integrity and guarantee driver and passenger safety.
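As a minimal sketch of the redundancy idea, the Python example below tries redundant sources in priority order and returns the first valid reading. It is a simplified illustration, not a safety-certified design: the function names, the simulated radar fault and the 42.5 m reading are all hypothetical, and a production system would add diagnostics, timing guarantees and a defined safe state.

```python
from typing import Callable, Optional, Sequence

# A source returns a reading, or None if it has nothing valid to report.
Source = Callable[[], Optional[float]]


def read_with_failover(sources: Sequence[Source]) -> Optional[float]:
    """Return the first valid reading from sources tried in priority order.

    A source that raises or returns None is treated as failed and skipped.
    Returns None when every source has failed, so that the caller can
    trigger its fail-safe behavior.
    """
    for read in sources:
        try:
            value = read()
        except Exception:
            continue  # this source failed; try the next redundant one
        if value is not None:
            return value
    return None


def failed_radar() -> Optional[float]:
    raise RuntimeError("radar offline")  # simulated component failure


def backup_camera() -> Optional[float]:
    return 42.5  # hypothetical distance to the lead vehicle, in meters


distance = read_with_failover([failed_radar, backup_camera])
print(distance)  # 42.5
```

The same pattern applies to redundant processors or communication pathways: the caller depends on the ordered list of alternatives, not on any single component.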

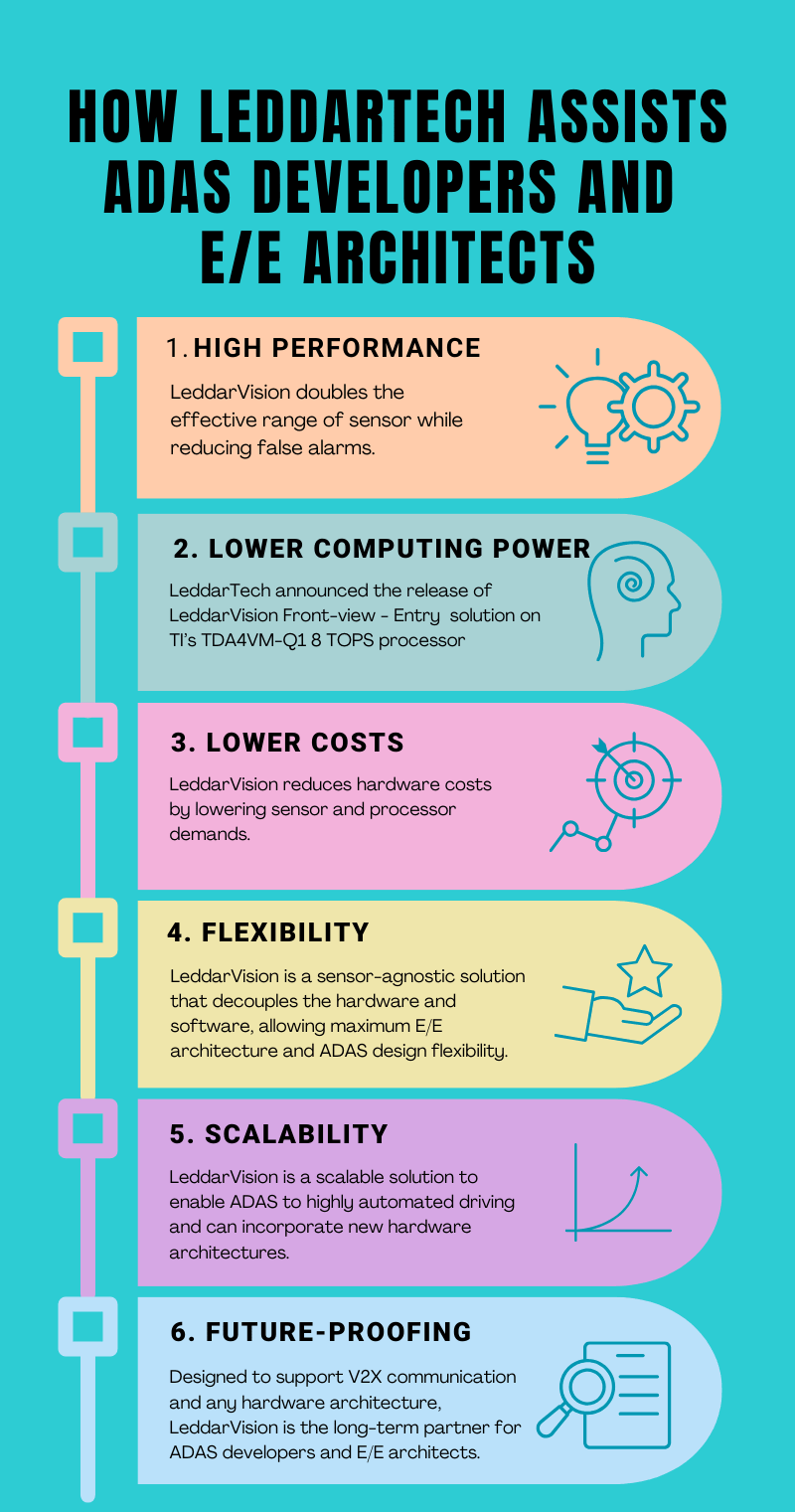

How LeddarTech’s Perception Solutions Assist ADAS/AD Developers and E/E Architects

LeddarVision is an advanced environmental perception solution that utilizes AI-based low-level sensor fusion technology for the automotive market. LeddarVision software provides a comprehensive 3D environment model, delivering superior perception performance from any sensor set. LeddarVision’s AI is what makes this scalability across sensor sets possible, fostering continuous improvement in perception training from system to system.

In addition to the LeddarVision software, LeddarTech supports customers seeking specific perception systems that enable ADAS capabilities, offering preconfigured LeddarVision products for front-view (LVF) and surround-view (LVS-2+) perception. The LeddarVision product family is designed to enable L2/L2+ ADAS, 5-star safety ratings under new car assessment programs (NCAP) and compliance with general safety regulations (GSR).

The preceding sections outlined the challenges faced by E/E architects: computing power, cost, scalability, flexibility, performance, sensor integration and V2X support. These challenges are equally pivotal for ADAS developers creating new features. The following paragraphs detail how LeddarVision addresses each of them.