Fascinating World of ADAS and AD

The advanced driver assistance system (ADAS) and autonomous driving (AD) market is expected to reach $42Bn by 2030, growing at a compound annual growth rate (CAGR) of 11% between 2020 and 2030, as per McKinsey’s “Outlook on the automotive software and electronics market through 2030” report. The ADAS & AD market can be subdivided into three categories: environmental perception, functional integration and prediction & planning. Environmental perception is the foundational technology on which ADAS & AD are built. Driven by increasing consumer appetite, regulatory requirements and industry transition, ADAS & AD are becoming central to the automotive ownership and manufacturing experience.

This white paper provides an overview of the technologies that enable ADAS & AD, the different levels of vehicle automation and the factors influencing the adoption of automated vehicles. For deeper insights, explore these other white papers from LeddarTech:

- Cybersecurity in ADAS: Protecting Connected and Autonomous Vehicles

- A Comprehensive Overview of China-NCAP 2024 Regulations

- Hype to Highway: Today’s Opportunities in Automated Driving

- An Explanation of Perception Performance in ADAS and AD

- Exploring the Relationship Between ADAS, E/E Architecture and Perception Systems

Self-driving vehicles can travel from point A to point B without human intervention. The last few decades have witnessed a surge in interest in developing autonomous vehicles to improve road safety, reduce accidents and provide a superior mobility experience to all commuters. Autonomous vehicles come in different shapes, sizes, markets and technological capabilities.

Six Levels of Driving Automation

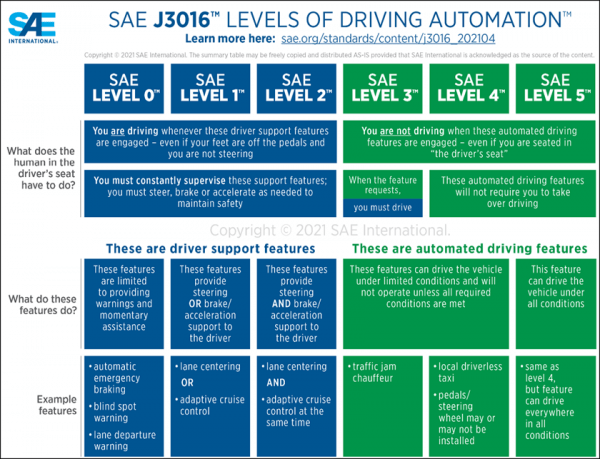

The Society of Automotive Engineers (SAE) has categorized driving automation into six levels, spanning from Level 0 (no driving automation) to Level 5 (full driving automation). Each of these levels is explained below:

- Level 0 (no automation): At this level, the vehicle’s system provides no control over its dynamics; it remains entirely under the driver’s manual control.

- Level 1 (driver assistance): In this category, specific control functions such as steering or acceleration/deceleration are automated, but not simultaneously. An example is adaptive cruise control (ACC).

- Level 2 (partial automation): At this stage, the vehicle takes charge of steering and acceleration/deceleration based on information about the driving environment. Nevertheless, the human driver must remain actively engaged, supervising the system and ready to assume full control when prompted or in response to system limits or failures.

- Level 3 (conditional automation): The vehicle can execute all aspects of the dynamic driving task within specific conditions, such as highway driving. It will prompt human intervention when these conditions are not met. While the driver must be available to take over, continuous monitoring of the environment is not mandatory.

- Level 4 (high automation): The vehicle can independently achieve all driving tasks within specific conditions, such as geofenced areas or road types. No human driver intervention is expected in these predefined environments, and no continuous driver attention is necessary.

- Level 5 (full automation): This is the highest level of automation, where the vehicle can perform all driving functions under all conditions. The vehicle is designed to be fully autonomous and operate without the need for a human driver.

Every level represents a significant step in integrating and advancing intricate systems, encompassing sensor fusion, machine learning, sophisticated decision-making algorithms and robust fail-safe mechanisms, pivotal for ensuring the safety and efficiency of autonomous and assisted vehicle operations.

Levels 1 and 2 are considered advanced driver assistance systems (ADAS), wherein the on-board vehicle technology is intended to augment the driver’s capabilities instead of taking over the driving task. Level 3 is also known as conditional automated driving systems, while levels 4 and 5 are also known as highly automated driving systems.
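The level definitions and groupings above can be captured in a small lookup structure. The following is a minimal Python sketch, not an official SAE artifact: the names `SAELevel`, `system_category`, `driver_must_monitor` and `fallback_driver_required` are hypothetical, and the rules simply encode the descriptions given in this section.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels as summarized in this section (names are illustrative)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def system_category(level: SAELevel) -> str:
    """Group a level the way the text does: ADAS, conditional AD or highly automated AD."""
    if level == SAELevel.NO_AUTOMATION:
        return "no automation"
    if level <= SAELevel.PARTIAL_AUTOMATION:
        return "ADAS"
    if level == SAELevel.CONDITIONAL_AUTOMATION:
        return "conditional automated driving"
    return "highly automated driving"


def driver_must_monitor(level: SAELevel) -> bool:
    """Up to Level 2 the driver continuously supervises the environment."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def fallback_driver_required(level: SAELevel) -> bool:
    """Up to Level 3 a human must be available to take over when prompted."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(
            f"Level {level.value}: {system_category(level)}, "
            f"monitoring required: {driver_must_monitor(level)}, "
            f"fallback driver required: {fallback_driver_required(level)}"
        )
```

Running the script prints one line per level, which makes the key boundary visible: the monitoring duty ends after Level 2, while the need for a fallback driver ends after Level 3.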

Figure 1 – The different levels of driving automation as defined by SAE International

Almost all new passenger vehicles sold in North America are equipped with Level 1 capabilities, and most are also equipped with Level 2 capabilities. In Asia and Africa, the fitment rates differ by country. Most new vehicles sold in countries like Japan, China and Korea are equipped with Level 1 and Level 2 capabilities. In contrast, new vehicles sold in many African countries might only be equipped with Level 1 capabilities.

Only a handful of on-road passenger vehicles are Level 3 certified and commercially available to the public, which makes Level 3 the most advanced technology that consumers can purchase today. Companies like Waymo, Cruise and Baidu operate vehicles with technology that meets Level 4 requirements, but these vehicles are not available for purchase. Level 4 vehicles can perform all driving tasks only within a certain area and under certain conditions. These technological limitations remain a key barrier to the widespread deployment of fully autonomous vehicles. As a result, there are no Level 5 autonomous vehicles on the road today.

Growing Importance of Scalability

The path to autonomous driving goes through ADAS. The development of AD systems is a gradual process with incremental technical advancements and breakthroughs. Additionally, the market might not be ready for AD-only vehicles, as many drivers and consumers will be skeptical of handing over control of their vehicle to a machine. As such, scalability is critical in enabling ADAS & AD. Technology providers that can scale their solution from Level 2 ADAS to Level 5 AD are likely to be the preferred partners of car manufacturers. Minimized rework, faster development cycles, quick feature additions & fixes and a short time-to-market are key benefits to car manufacturers as they embark on their journey of providing automated driving features. LeddarTech’s AI-based technology is uniquely positioned to enable car manufacturers to develop their vehicles on a single LeddarVision platform that scales from front-view to surround-view environmental perception by adding sensors and recalibrating.

Technology Driving ADAS and AD

The underlying technology of advanced driver assistance systems (ADAS) and autonomous driving (AD) systems is the same. However, as one moves from ADAS to AD systems, the technology’s complexity, output and requirements change. Solutions that cannot scale across these changing requirements are a major challenge in enabling mass deployment of AD systems.

The technology required to enable ADAS and AD systems can be broken down into six main categories:

- Sensing: Sensors such as cameras, lidar, radar and ultrasonic sensors gather real-time data about the vehicle’s environment. They provide crucial inputs for detecting objects, road conditions, and other factors necessary for safe navigation. High-resolution sensors must function reliably across diverse conditions, including low light, rain, and fog to ensure consistent performance.

- Perception: Once the sensors have provided the data, the data runs through perception algorithms to detect objects, lanes, and the vehicle’s surroundings. Perception systems use artificial intelligence and computer vision to identify and track objects like pedestrians, vehicles, and road signs. These systems are essential for understanding the dynamic environment and predicting potential hazards. By leveraging high-performance object detection models, perception technologies ensure the vehicle can respond appropriately to complex scenarios such as crowded intersections or fast-moving traffic.

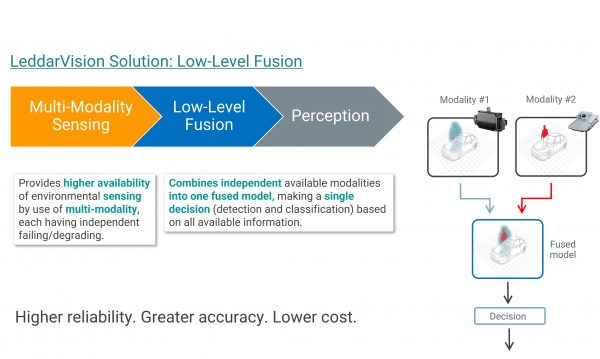

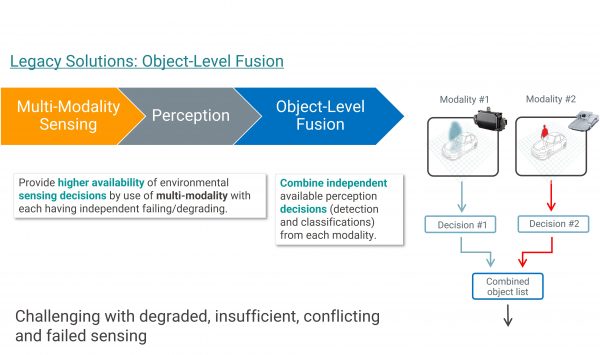

- Fusion: Fusion refers to combining outputs from multiple sources. There are two approaches to fusion: object-level and low-level. Object-level fusion refers to running perception algorithms on each sensor individually and then combining the outputs of the sensors. Low-level or raw data fusion refers to combining the raw data from each sensor before running the perception algorithms on the combined raw data.

Currently, most sensor fusion solutions use object-level fusion, wherein each sensor (e.g., radar, camera, lidar), with its inherent limitations, identifies and classifies objects individually. This approach is suboptimal because no single sensor can detect all objects under all conditions. Furthermore, when the raw sensor data is not fused, the system may receive contradictory inputs from its sensors and cannot determine the next action with certainty.

Figure 2 – Illustration of the object-level fusion process

Low-level fusion offers many advantages over object-level fusion. It has inherent system redundancy because it is not dependent on any one sensor. Additionally, the limitations of one sensor (such as limited lidar range) can be compensated for by another sensor (such as radar). Low-level fusion also enables the user to scale the same system from ADAS to AD applications, reducing R&D efforts, time-to-market and rework efforts.
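The difference between the two fusion approaches can be illustrated with a toy example. The Python sketch below is not LeddarTech's algorithm: the per-cell evidence values, the 0.5 threshold and the noisy-OR combination rule are all assumptions chosen for illustration. It shows how weak evidence that stays below each sensor's own detection threshold can still produce a detection once the raw evidence is combined first.

```python
from typing import Dict, List

# Hypothetical raw evidence (0.0 to 1.0) that an object occupies each of four cells.
RAW_EVIDENCE: Dict[str, List[float]] = {
    "camera": [0.9, 0.4, 0.1, 0.0],
    "radar": [0.8, 0.4, 0.0, 0.7],
}
THRESHOLD = 0.5  # assumed decision threshold, identical for every detector


def detect(evidence: List[float], threshold: float = THRESHOLD) -> List[bool]:
    """Stand-in for a perception algorithm: threshold the evidence per cell."""
    return [value >= threshold for value in evidence]


def object_level_fusion(raw: Dict[str, List[float]]) -> List[bool]:
    """Run detection on each sensor separately, then merge the detections."""
    per_sensor = [detect(evidence) for evidence in raw.values()]
    return [any(cell) for cell in zip(*per_sensor)]


def low_level_fusion(raw: Dict[str, List[float]]) -> List[bool]:
    """Combine raw evidence first (noisy-OR, an assumed rule), then detect once."""
    fused = []
    for cell in zip(*raw.values()):
        miss_probability = 1.0
        for value in cell:
            miss_probability *= 1.0 - value
        fused.append(1.0 - miss_probability)
    return detect(fused)


if __name__ == "__main__":
    print("object-level:", object_level_fusion(RAW_EVIDENCE))
    print("low-level:   ", low_level_fusion(RAW_EVIDENCE))
```

In the second cell, both sensors report evidence of 0.4, so neither detects an object on its own and object-level fusion misses it. Combining the raw evidence yields 0.64, which crosses the threshold. A production system would fuse far richer data than one score per cell, so this only conveys the principle.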

- Localization: Localization determines the vehicle’s precise position in its environment, a critical aspect of autonomous navigation. Combining GPS, high-definition maps and inertial measurement units (IMUs), localization systems provide centimeter-level accuracy. These systems must also function in GPS-denied environments, such as tunnels or urban canyons, ensuring consistent and reliable navigation under all conditions.

- Path Planning: Path planning refers to developing an autonomous driving route from point A to point B. The road environment is dynamic, so the path-planning algorithm must be adaptable and robust to handle such changes while also considering legal and ethical implications for decision-making.

- Control: Control systems translate the decisions made by the vehicle’s AI into physical actions such as steering, acceleration and braking. These systems rely on drive-by-wire technology and advanced actuators to execute commands with high precision and responsiveness. Control systems are equipped with fail-safe mechanisms and redundancy to ensure reliability, which is vital for maintaining safety in critical situations.
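The path-planning step described above, including the need to adapt when the road environment changes, can be sketched with a simple grid search. This is a minimal Python illustration under stated assumptions: the occupancy grid, the `plan_path` function and the use of breadth-first search are hypothetical stand-ins, since production planners work on continuous trajectories and weigh vehicle dynamics, traffic rules and comfort.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in a hypothetical occupancy grid


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search for a shortest route; '#' marks a blocked cell."""
    rows, cols = len(grid), len(grid[0])
    parents: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk back through the parent links to rebuild the route.
            path = []
            node: Optional[Cell] = current
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            n_row, n_col = nxt
            inside = 0 <= n_row < rows and 0 <= n_col < cols
            if inside and grid[n_row][n_col] != "#" and nxt not in parents:
                parents[nxt] = current
                queue.append(nxt)
    return None  # no drivable route exists


if __name__ == "__main__":
    start, goal = (0, 0), (0, 2)
    clear_road = ["...", "...", "..."]
    print("initial plan:", plan_path(clear_road, start, goal))

    # A new obstacle is perceived at (0, 1), so the planner is run again.
    blocked_road = [".#.", "...", "..."]
    print("replanned:   ", plan_path(blocked_road, start, goal))
```

Re-running the planner whenever perception reports a change is the simplest form of adaptability. The replanned route is longer because it detours around the obstacle, and the function returns `None` when no route exists so that the caller can fall back to a safe stop.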

The role of perception systems is pivotal for ADAS and AD developers, who strive to boost system reliability and performance. When assessing the performance of perception systems, automotive OEMs and Tier 1s must consider factors such as false alarms, object separation capability at large distances, occluded object detection ability, perception range for a given sensor set and performance in adverse conditions. Sensor fusion and perception systems that excel in all these aspects empower automotive OEMs and Tier 1s to develop ADAS and AD systems that enhance the mobility experience, operate reliably and achieve a 5-star performance in safety tests. LeddarTech’s proprietary AI-based low-level sensor fusion and perception technology, available on an embedded processor, is at the forefront of facilitating the widespread adoption of ADAS and AD systems.

Factors Influencing ADAS and AD Development

Autonomous vehicles were promised to be widely available by now, but this has not materialized. Advanced driver assistance systems (ADAS) are quickly growing in stature, importance and need, while autonomous driving systems are becoming a longer-term goal. ADAS is both a feature that consumers want and a safety prerequisite. Many factors influence the development and greater adoption of ADAS and AD functions, some of which are explained below:

- Legal Factors: As a safety enhancer, ADAS applications are a legal necessity in certain places. Europe’s General Safety Regulation (GSR) requires new vehicles to be equipped with ADAS safety features such as automatic emergency braking (car-to-car, car-to-pedestrian and car-to-motorbike scenarios), emergency lane keeping and blind spot information systems. Similarly, the USA’s NHTSA and China’s NCAP programs extensively test the ADAS applications on new vehicles, based on which they provide a safety rating for the vehicle.

- Political Factors: The regulations governing AD development, deployment and testing are political decisions, as the differing approaches of various states and nations show. China and Korea have been leaders in Asia, encouraging AD testing by granting licenses and creating special zones and infrastructure that enable real-world testing of AD technology. In Europe, policies like the General Data Protection Regulation (GDPR) influence how AD systems handle data, while stringent emissions targets encourage the integration of electric and autonomous technologies. Furthermore, national governments offer incentives for AD testing and infrastructure upgrades. In North America, different states have taken different approaches. States leading AD adoption include Georgia, California and Arizona, with Nevada also granting licenses for AD deployment and consumer-paid rides.

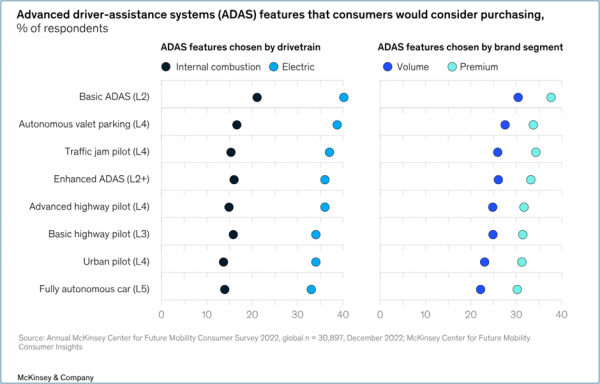

- Consumer Trends: Consumers are inclined to purchase vehicles with advanced ADAS features. This is evident in McKinsey’s “Hands-off: Consumer perceptions of advanced driver assistance systems” report, which states: “For future purchases, we see a stronger consumer pull for increasing degrees of driving assistance and technology-enabled autonomy in their next cars, especially as more people seek electrified mobility options. Only 5 percent of electric vehicle (EV) buyers say they do not want any ADAS features in their cars; in the premium segments, that figure falls to less than 1 percent of consumers.”

Figure 4 – ADAS features that consumers would consider purchasing, % of respondents

(source: McKinsey’s Report on Consumer Perceptions of ADAS)

- Economic Trends: OEM executives are under unprecedented pressure to revive their companies and forge a path to success. They face aggressive Chinese OEMs that are expanding into European and North American markets, a slowdown in EV sales, new environmental targets set by cities, provinces and federal governments, and fewer new vehicles sold. Many OEMs have spent billions of dollars over many years developing autonomous vehicles. With AD systems still far out, OEMs are pivoting to providing their customers with advanced ADAS features that allow OEMs to generate revenue immediately.

The LeddarTech Opportunity

LeddarTech is a leader in environmental perception technology, driven by its innovative LeddarVision software, an AI-based platform delivering high accuracy, cost efficiency, and scalability to automotive OEMs and Tier 1s. Automotive original equipment manufacturers (OEMs) and Tier 1s often struggle with high system costs, integration complexity, long development timelines, and ensuring scalability across vehicle platforms. LeddarTech’s AI-driven LeddarVision software solves these problems by delivering high performance with fewer sensors and lower computing power, reducing overall costs.

With real-world demonstrations and overwhelmingly positive industry feedback, LeddarTech is uniquely positioned to enable OEMs and Tier 1s to develop and deploy advanced ADAS & AD features. The solution, LeddarVision, is further validated by the close partnerships that LeddarTech has with two industry giants, Texas Instruments and Arm. LeddarTech’s collaboration with Texas Instruments integrates LeddarVision with TI’s TDA4 processors, creating a pre-validated ADAS/AD solution that reduces complexity, cost and time-to-market for OEMs and Tier 1 suppliers. This partnership is backed by an advance royalty payment of nearly USD 10 million from TI, signaling strong market confidence in LeddarVision.

Similarly, LeddarTech and Arm have been collaborating to enhance the efficiency of the solution. By optimizing critical performance-defining algorithms within the ADAS perception and fusion stack for Arm CPUs, Arm and LeddarTech have successfully minimized computational bottlenecks and enhanced overall system efficiency using the Arm Cortex-A720AE CPU. Take a closer look at this groundbreaking collaboration. This Case Study provides a detailed account of the two organizations’ journey as they push the boundaries of innovation and pioneer the future of automotive safety.

This White Paper does not constitute a reference design. The recommendations contained herein are provided “as is” and do not constitute a guarantee of completeness or correctness. LeddarTech® has made every effort to ensure that the information contained in this document is accurate. Any information herein is provided “as is.” LeddarTech shall not be liable for any errors or omissions herein or for any damages arising out of or related to the information provided in this document. LeddarTech reserves the right to modify design, characteristics and products at any time, without notice, at its sole discretion. LeddarTech does not control the installation and use of its products and shall have no liability if a product is used for an application for which it is not suited. You are solely responsible for (1) selecting the appropriate products for your application, (2) validating, designing and testing your application and (3) ensuring that your application meets applicable safety and security standards. Furthermore, LeddarTech products are provided only subject to LeddarTech’s Sales Terms and Conditions or other applicable terms agreed to in writing. By purchasing a LeddarTech product, you also accept to carefully read and to be bound by the information contained in the User Guide accompanying the product purchased.