A phantom braking incident here, a long-distance drive made easy there: the first lowers consumer confidence in autonomous vehicles (AVs), while the second increases adoption of advanced driver assistance systems (ADAS). These are some of the challenges that ADAS developers navigate. These trends drive the automated and assisted mobility experience, with the perception system at its core. As ADAS developers seek to enhance the reliability of ADAS performance, understanding how to evaluate perception systems is crucial.

Welcome to the dynamic landscape of sensor fusion and perception systems, where a convergence of technologies creates a holistic view of the environment. In the fast-evolving world of ADAS, the seamless amalgamation of data from diverse sensors is a necessity. Each sensor, with its unique strengths, contributes to a unified, reliable and enriched perception, which is essential for the safety and efficacy of ADAS. But here’s the kicker: truly harnessing the power of these complex systems requires a profound understanding of their performance.

Understanding the various aspects of perception performance is critical to crafting superior ADAS features with exceptional capabilities. For ADAS developers, this task extends beyond merely keeping pace with industry trends; it involves setting them. This White Paper explores the various facets of performance in sensor fusion and perception systems and shows how this knowledge is integral to developing robust and innovative ADAS solutions that meet today’s technological advancements and industry demands. Below are the key performance indicators (KPIs) that ADAS developers must examine when evaluating a sensor fusion and perception system.

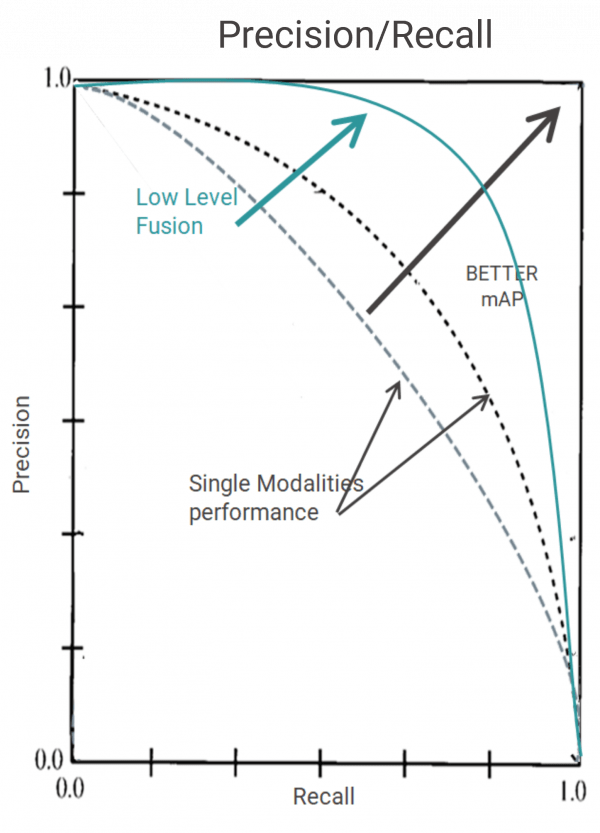

1. False alarms: In the context of sensor fusion and perception, false alarms are errors in which the output of the perception system does not match the actual scene. If the sensor fusion and perception system detects an object that does not actually exist, this is a false positive. Conversely, if the perception system fails to detect an object that actually exists, this is a false negative. False positives are also sometimes referred to as “ghosts.”

False alarms are critical KPIs. A high false alarm rate causes ADAS features to malfunction: the automatic emergency braking (AEB) system may fail to activate when it should (false negative), or the vehicle may brake for an obstacle that is not there (false positive, i.e., phantom braking). Read “Evaluating Perception Systems: A Guide to Precision, Recall and Specificity” to better understand false alarms.
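As a rough illustration of how the false positive and false negative counts described above translate into precision, recall and specificity, consider the following minimal sketch. The class name, function names and example counts are hypothetical and chosen only for illustration; they are not taken from LeddarTech’s evaluation tooling.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    """Hypothetical tally of perception outcomes over a labeled test set."""
    true_positives: int   # real objects that were detected
    false_positives: int  # "ghost" detections with no real object behind them
    false_negatives: int  # real objects that were missed
    true_negatives: int   # empty regions correctly reported as empty


def precision(c: DetectionCounts) -> float:
    """Share of reported detections that correspond to real objects."""
    denom = c.true_positives + c.false_positives
    return c.true_positives / denom if denom else 0.0


def recall(c: DetectionCounts) -> float:
    """Share of real objects that were detected."""
    denom = c.true_positives + c.false_negatives
    return c.true_positives / denom if denom else 0.0


def specificity(c: DetectionCounts) -> float:
    """Share of empty regions that were correctly reported as empty."""
    denom = c.true_negatives + c.false_positives
    return c.true_negatives / denom if denom else 0.0


# Made-up example counts, for illustration only.
counts = DetectionCounts(true_positives=90, false_positives=5,
                         false_negatives=10, true_negatives=895)

print(f"precision   = {precision(counts):.3f}")
print(f"recall      = {recall(counts):.3f}")
print(f"specificity = {specificity(counts):.3f}")
```

Fewer ghosts raise precision and specificity, while fewer missed objects raise recall, which is why both kinds of error need to be tracked together.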

LeddarTech’s AI-based low-level sensor fusion and perception technology is tuned for better recall and precision, resulting in fewer false alarms. This means that ADAS developers can be more confident in their systems, and end users (drivers and passengers) are more comfortable with, and more trusting of, the ADAS features in the vehicle.

Figure 1: AI-based LeddarVision pushes the performance envelope, resulting in higher precision and recall

2. Object separation and occluded object detection: How effectively can the sensor fusion and perception system differentiate objects at large distances, particularly in highway ADAS applications? A camera alone cannot differentiate two objects at significant distances due to low resolution. Similarly, radar cannot differentiate two adjacent objects driving at the same speed. LeddarVision’s low-level fusion accurately identifies the two objects as two distinct entities using the same sensor set at distances exceeding 150 m. At significant distances, object separation enables precise lane allocation for vehicles in front of the ego-vehicle, thereby supporting adaptive cruise control (ACC) at higher velocities.
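To get a feel for why camera resolution limits object separation at long range, the sketch below estimates how many horizontal pixels a 1 m gap between two vehicles would occupy at different distances. It assumes an ideal pinhole camera without lens distortion; the 1920-pixel image width and 60-degree horizontal field of view are illustrative assumptions, not the specification of any particular sensor set.

```python
import math


def pixels_spanned(extent_m: float, distance_m: float,
                   image_width_px: int, hfov_deg: float) -> float:
    """Approximate number of horizontal pixels covered by an object or gap
    of width extent_m at distance_m, near the image center, for an ideal
    pinhole camera (no lens distortion)."""
    # Focal length expressed in pixels, derived from the horizontal FOV.
    focal_px = (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    return focal_px * extent_m / distance_m


# Assumed camera: 1920 px wide (about 2 Mpx), 60 degree horizontal FOV.
for distance in (50.0, 100.0, 150.0):
    gap_px = pixels_spanned(1.0, distance, 1920, 60.0)
    print(f"1 m gap at {distance:.0f} m spans about {gap_px:.1f} px")
```

Under these assumptions the gap shrinks to roughly 11 pixels at 150 m, and a wider field of view or a lower-resolution imager reduces it further.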

Another key consideration when evaluating a perception system is whether it can detect occluded objects. In urban conditions, can the perception system detect and track a pedestrian on the sidewalk looking to cross the road? Will the perception system track a person hidden behind parked vehicles on the side? This functionality is crucial for enabling safe ADAS applications and is essential for protecting vulnerable road users (VRUs). Occluded object detection significantly enhances AEB performance in “cut-out” use cases, which are part of the Euro NCAP ADAS tests.

3. Range: Reliable and continuous detection and tracking at extended ranges allows for highway assist features at higher velocities and in more challenging operational design domains (ODDs), where the braking profile is less forgiving (e.g., wet road conditions). Range is therefore an important consideration for perception systems in ADAS and AD.

LeddarTech’s AI-based low-level sensor fusion and perception technology, LeddarVision™, extends the effective perception range. Compared to traditional and prevalent object-level fusion, LeddarVision can as much as double the effective range using the same sensor set. The LeddarVision Front-View – Entry (LVF-E) software stack doubles the supported object detection range to over 150 m using one 1-2 Mpx front camera and two short-range front corner radars.

Similarly, the LeddarVision Front-View – High (LVF-H) and LeddarVision Surround-View – Premium (LVS-2+) extend the object detection range to over 200 m. Early detection is crucial for vehicles to achieve a 5-star rating in new car assessment programs (NCAP), especially as specified in Euro NCAP 2025.
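To illustrate why detection range matters more when the braking profile is less forgiving, the following sketch estimates stopping distance with the standard constant-deceleration model: the distance travelled during system latency plus v² / (2·μ·g). The friction coefficients (0.7 for dry, 0.4 for wet) and the 0.5 s latency are illustrative assumptions, not values taken from this White Paper or from Euro NCAP.

```python
G = 9.81  # gravitational acceleration in m/s^2


def stopping_distance_m(speed_kmh: float, friction: float,
                        latency_s: float) -> float:
    """Distance covered during system latency plus the braking distance
    under constant deceleration friction * G (simplified model)."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * latency_s + v * v / (2.0 * friction * G)


# Assumed values: 130 km/h highway speed, 0.5 s detection-to-brake latency.
for label, mu in (("dry", 0.7), ("wet", 0.4)):
    d = stopping_distance_m(130.0, mu, 0.5)
    print(f"{label} road (mu={mu}): about {d:.0f} m to stop from 130 km/h")
```

Under these assumptions, stopping from 130 km/h takes roughly 113 m on a dry road and roughly 184 m on a wet one, so the difference between a 150 m and a 200 m detection range can decide whether the vehicle is able to stop in time.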

4. Operation in adverse conditions: ADAS and AD systems must operate in various weather conditions throughout the year, not just in ideal conditions. If the perception system fails in rain, snow or other adverse conditions, it may render the vehicle inoperable, limiting its usability and practicality. A perception system that functions effectively in adverse conditions can better detect and respond to obstacles, other vehicles and pedestrians, reducing the risk of accidents. On the other hand, if the sensor fusion and perception system is unable to do so, this will negatively impact consumer confidence in ADAS and AD systems, further straining the relationship between end users and partially and fully automated vehicles.

Each sensor (camera, radar, LiDAR) has limitations. The camera does not perform well in adverse conditions such as low light, dusty environments, rain, fog or snow. Similarly, the limited resolution of radar and LiDAR at longer distances poses a challenge. A perception solution based on object-level fusion inherits these individual sensor weaknesses because it fuses the perception outputs that each sensor produces on its own. By contrast, low-level fusion solutions fuse the raw data of all sensors before applying perception algorithms to the resulting comprehensive dataset. This approach allows low-level sensor fusion and perception solutions, such as LeddarVision, to mitigate individual sensor weaknesses by leveraging other sensors’ strengths.

Furthermore, low-level fusion and perception solutions do not suffer from sensor contradictions. In adverse conditions, while the camera might fail to detect an object, the radar can still identify an object at the same location. Perception systems based on object-level fusion will need to decide whether the object exists and which sensor is providing the correct output. Since LeddarVision fuses raw sensor data, it has no sensor contradictions.
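The contrast can be illustrated with a deliberately simplified toy model. This is not how LeddarVision works internally: real low-level fusion operates on raw sensor data with dedicated perception algorithms. Here, each sensor is reduced to a single hypothetical evidence score between 0 and 1. Object-level fusion thresholds each score separately and may end up with conflicting decisions, whereas the "fuse first" path combines the evidence before making one decision, using a naive-Bayes log-odds sum as a stand-in. All names and numbers are assumptions.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def object_level_decisions(scores: dict, threshold: float = 0.5) -> dict:
    """Each sensor decides on its own, before any fusion takes place."""
    return {name: score >= threshold for name, score in scores.items()}


def has_contradiction(decisions: dict) -> bool:
    """True when the per-sensor decisions disagree and need arbitration."""
    return len(set(decisions.values())) > 1


def fused_probability(scores: dict) -> float:
    """Toy 'fuse first, decide once' step: sum the log-odds (equal priors,
    independent sensors assumed), then convert back to a probability."""
    return sigmoid(sum(logit(s) for s in scores.values()))


# Hypothetical foggy scene: weak camera evidence, strong radar evidence.
scores = {"camera": 0.3, "radar": 0.9}

decisions = object_level_decisions(scores)
print("per-sensor decisions:", decisions)
print("arbitration needed:", has_contradiction(decisions))
print(f"fused probability: {fused_probability(scores):.2f}")
```

In this toy case the per-sensor decisions disagree, while the fused evidence yields a single probability of about 0.79 and therefore one decision, with no arbitration step between sensors.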

LeddarVision demonstrates robust performance in adverse conditions, detecting, tracking and classifying objects under direct sunlight, at night or in dimly lit areas, and in rain, snow, fog and dusty environments in which sensors may be degraded or rendered inoperable.

This White Paper does not constitute a reference design. The recommendations contained herein are provided “as is” and do not constitute a guarantee of completeness or correctness. LeddarTech® has made every effort to ensure that the information contained in this document is accurate. Any information herein is provided “as is.” LeddarTech shall not be liable for any errors or omissions herein or for any damages arising out of or related to the information provided in this document. LeddarTech reserves the right to modify design, characteristics and products at any time, without notice, at its sole discretion. LeddarTech does not control the installation and use of its products and shall have no liability if a product is used for an application for which it is not suited. You are solely responsible for (1) selecting the appropriate products for your application, (2) validating, designing and testing your application and (3) ensuring that your application meets applicable safety and security standards. Furthermore, LeddarTech products are provided only subject to LeddarTech’s Sales Terms and Conditions or other applicable terms agreed to in writing. By purchasing a LeddarTech product, you also accept to carefully read and to be bound by the information contained in the User Guide accompanying the product purchased.

In the ever-evolving landscape of automated and assisted mobility, the role of perception systems is pivotal. This is particularly relevant for ADAS and autonomous driving developers, who strive to boost system reliability and performance. When assessing the performance of perception systems, automotive OEMs and Tier 1s must consider factors such as false alarms, object separation capability at large distances, occluded object detection ability, perception range for a given sensor set and perception performance in adverse conditions. Sensor fusion and perception systems that excel in all these aspects empower automotive OEMs and Tier 1s to develop ADAS systems that enhance the mobility experience, operate reliably and achieve a 5-star performance in NCAP testing, meeting the requirements outlined in Euro NCAP 2025. LeddarTech’s proprietary low-level sensor fusion and perception technology, available on an embedded processor, is at the forefront of facilitating the widespread adoption of ADAS and AD systems.
